“Social learning. Our species is the only one that voluntarily shares information: we learn a lot from our fellow humans through language.”

“In our brains, by contrast, the highest-level information, which reaches our consciousness, can be explicitly stated to others. Conscious knowledge comes with verbal reportability: whenever we understand something in a sufficiently perspicuous manner, a mental formula resonates in our language of thought, and we can use the words of language to report it.”

“One-trial learning. An extreme case of this efficiency is when we learn something new on a single trial. If I introduce a new verb, let’s say …”

“To learn is to succeed in inserting new knowledge into an existing network.”

--- Stanislas Dehaene. “How We Learn.”

Combining these two modes of learning, social and one-trial, creates a cascading effect.

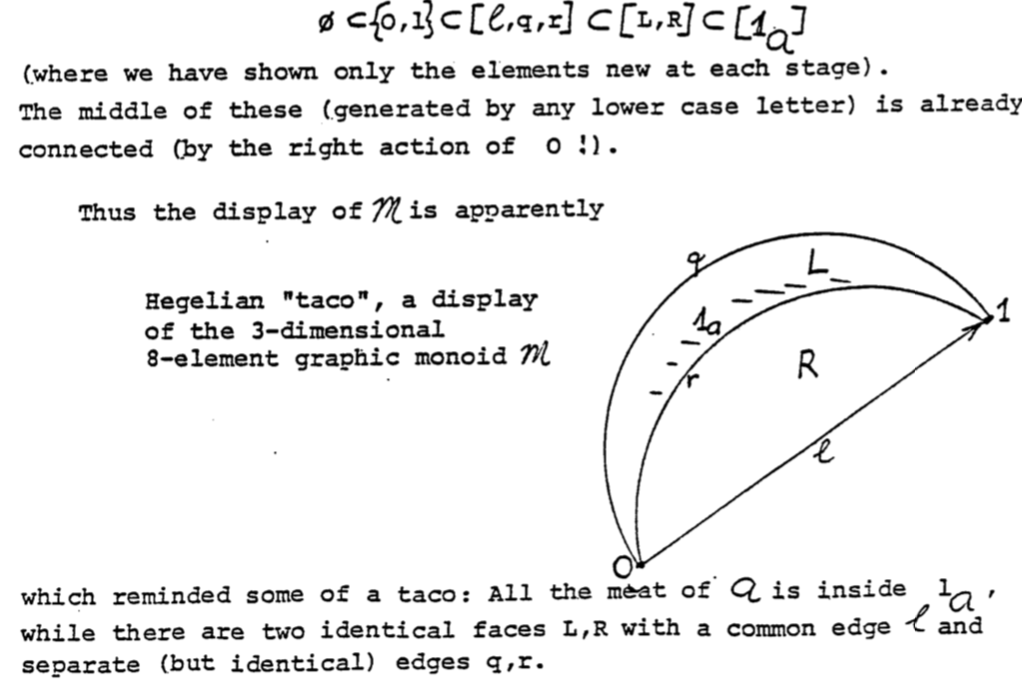

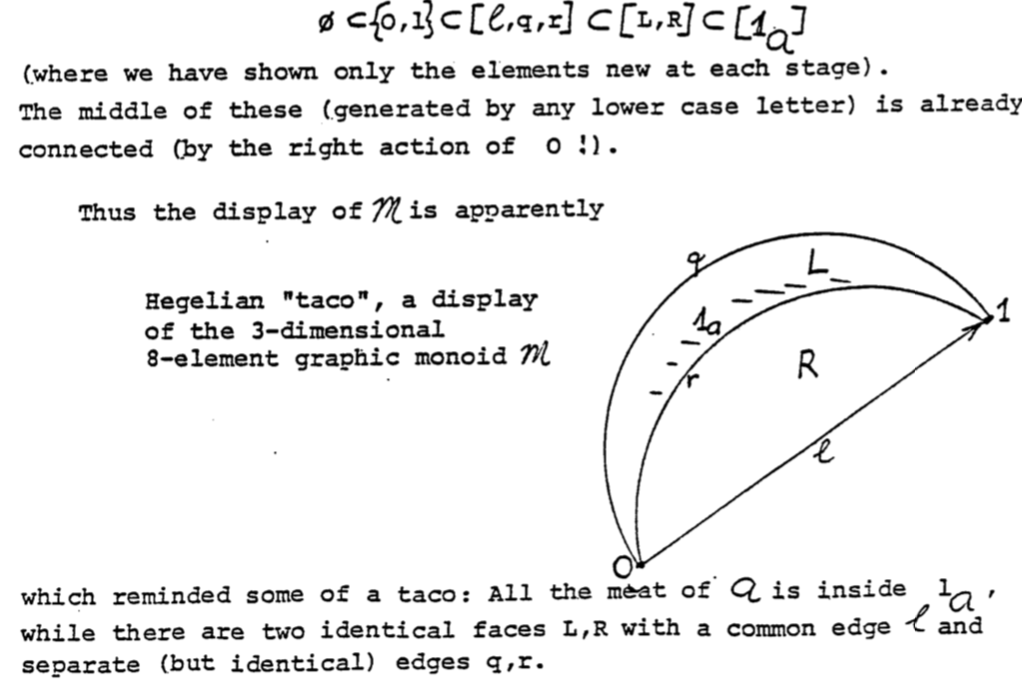

We can model this as a change of state: learning-by-doing split across a pair of individuals, in which the first one does and the second one ("the soul") learns. For example, Little Red Riding Hood (TLRRH) starts out naive and dies on the way to her grandma. Meanwhile we, the observers, learn from her one-time misfortune and pass that lesson on to future generations.
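The two-agent model can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Agent` class, its `act`, `observe`, and `teach` methods, and the hazard label are hypothetical names introduced here, not anything from the book. The doer changes state by failing once, the observer changes state by watching that single failure, and the lesson is then handed on through teaching.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """Hypothetical agent: a name, a set of learned lessons, and a life state."""
    name: str
    lessons: set = field(default_factory=set)  # what this agent already knows
    alive: bool = True

    def act(self, hazard: str) -> bool:
        """Face a hazard; return True if the agent survives it."""
        if hazard not in self.lessons:
            self.alive = False  # the naive doer pays the full price
        return self.alive

    def observe(self, doer: "Agent", hazard: str) -> None:
        """One-trial learning: a single observed failure is enough."""
        if not doer.alive:
            self.lessons.add(hazard)

    def teach(self, learner: "Agent") -> None:
        """Social learning: pass every known lesson on to someone else."""
        learner.lessons |= self.lessons


HAZARD = "talking to the wolf"  # assumed label for the danger in the tale

doer = Agent("TLRRH")           # starts naive
observer = Agent("observer")    # "the soul" that does the learning

doer.act(HAZARD)                # state change: alive -> dead
observer.observe(doer, HAZARD)  # state change: naive -> informed

child = Agent("next generation")
observer.teach(child)           # the lesson cascades forward
survived = child.act(HAZARD)    # the informed child avoids the same fate
```

Keeping the doer and the learner as separate objects is the point of the sketch: the cost of the trial falls on one agent, while the change in knowledge lands on another and can then spread without anyone repeating the trial.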

Also related: fool me once, shame on you; fool me twice, shame on me.