
Jan. 31st, 2026 07:35 pm

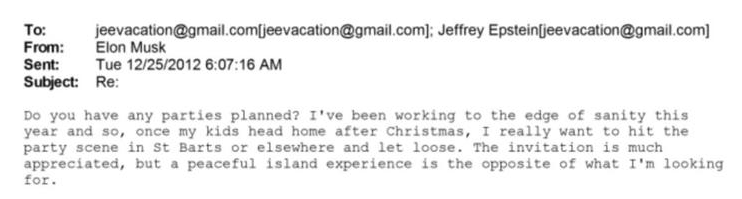

Moral or, more precisely, immoral issues aside, Epstein ran a successful service business where he provided his customers, aka "friends", with exactly what they wanted when they wanted it. He also assumed legal risks, which the customers appreciated. That aspect of the business ultimately led to his incarceration and death.

The "Hollywood Madam" was convicted in the 1990s, so Epstein learned from her mistakes and located his high-end sex services center on an island, not in a large city like LA where word would eventually get out.

I wonder who provides the services now and how they deal with the risks.